Gradient Descent (UDA)

-

class gd4cgp : public pagmo::not_population_based

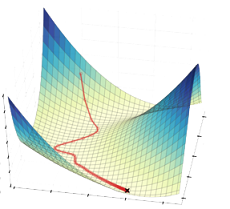

Gradient descent for a Cartesian Genetic Program.

In a symbolic regression problem, model parameters are typically present in the form of ephemeral constants (i.e. extra input terminals). The actual values of the constants have a profound effect on the resulting loss, and it is an open question how to balance the learning of the model parameters (continuous optimization) with learning the model itself (integer optimization).

In this class we provide a simple gradient descent algorithm able to tackle dcgp::symbolic_regression problems. The gradient descent will only modify the continuous part of the chromosome, leaving the integer part (i.e. the actual model) unchanged.

Public Types

-

typedef std::tuple<unsigned, unsigned long long, unsigned long long, double, double, double> log_line_type

Single entry of the log (iter, fevals, gevals, gradient_magnitude, lr, best)

-

typedef std::vector<log_line_type> log_type

The log.
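The two typedefs above can be read with the standard tuple accessors. The following is a minimal sketch that redeclares the aliases locally so it compiles without dcgp; the helper names format_log_line and last_best are hypothetical and not part of the library.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <tuple>
#include <vector>

// Local aliases mirroring the documented typedefs:
// (iter, fevals, gevals, gradient_magnitude, lr, best)
using log_line_type = std::tuple<unsigned, unsigned long long, unsigned long long, double, double, double>;
using log_type = std::vector<log_line_type>;

// Hypothetical helper: formats one entry as "iter fevals gevals grad_norm lr best".
std::string format_log_line(const log_line_type &line)
{
    std::ostringstream oss;
    oss << std::get<0>(line) << ' ' << std::get<1>(line) << ' ' << std::get<2>(line) << ' '
        << std::get<3>(line) << ' ' << std::get<4>(line) << ' ' << std::get<5>(line);
    return oss.str();
}

// Hypothetical helper: best fitness stored in the last entry of a non-empty log.
double last_best(const log_type &log)
{
    assert(!log.empty());
    return std::get<5>(log.back());
}
```

With the real class, the same helpers would presumably be applied to the value returned by get_log().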

Public Functions

-

inline gd4cgp(unsigned max_iter = 1u, double lr = 1., double lr_min = 1e-3)

Constructor.

Constructs a stochastic gradient descent for use with a dcgp::symbolic_regression UDP that has any strictly positive number of ephemeral constants.

- Parameters

max_iter – maximum number of iterations.

lr – initial learning rate.

lr_min – minimum learning rate.

- Throws

std::invalid_argument – if lr_min is not in (0, lr)
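The documented precondition on the learning rates can be sketched as a standalone check. This is only an illustration of the stated rule (lr_min must lie in the open interval (0, lr)); the function name check_learning_rates is hypothetical and the actual constructor may word or order its checks differently.

```cpp
#include <stdexcept>

// Hypothetical stand-in for the documented constructor check:
// throws unless 0 < lr_min < lr. Written so that NaN inputs are rejected too.
void check_learning_rates(double lr, double lr_min)
{
    if (!(lr_min > 0. && lr_min < lr)) {
        throw std::invalid_argument("lr_min must be in (0, lr)");
    }
}
```

The documented defaults (lr = 1., lr_min = 1e-3) pass this check, while lr_min = 0, a negative lr_min, or lr_min >= lr would be rejected.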

-

inline pagmo::population evolve(pagmo::population pop) const

Algorithm evolve method.

Evolves the population for at most max_iter iterations.

- Parameters

pop – population to be evolved

- Throws

std::invalid_argument – if a dcgp::symbolic_regression cannot be extracted from the problem

std::invalid_argument – if no ephemeral constants are detected in the model.

std::invalid_argument – if the number of objectives is not 1.

- Returns

evolved population
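As the class description notes, only the continuous part of the chromosome is updated. The sketch below shows a single plain gradient step under two assumptions that are not taken from the implementation: the continuous entries come first in the chromosome (the pagmo convention for mixed-integer decision vectors), and the gradient has one entry per continuous variable. The function gradient_step is hypothetical; the actual algorithm may also rescale the gradient or perform a line search.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One gradient-descent step on a mixed chromosome.
// Assumption: the first grad.size() entries of x are the continuous part
// (the ephemeral constants); the remaining entries encode the integer part
// (the model) and are left untouched.
std::vector<double> gradient_step(std::vector<double> x, const std::vector<double> &grad, double lr)
{
    const std::size_t n_cont = grad.size();
    assert(n_cont <= x.size());
    for (std::size_t i = 0; i < n_cont; ++i) {
        x[i] -= lr * grad[i]; // move against the gradient of the loss
    }
    return x;
}
```

For example, with x = {1.0, 2.0, 3, 0, 1}, grad = {0.5, -1.0} and lr = 0.5, only the first two entries change (to 0.75 and 2.5), while the trailing integer genes are returned as they were.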

-

inline void set_verbosity(unsigned level)

Sets the algorithm verbosity.

Sets the verbosity level of the screen output and of the log returned by get_log().

level can be:

- 0: no verbosity

- >0: will print and log one line each level generations.

Example (verbosity 1):

| Iter: | Fevals: | Gevals: | grad norm: | lr: | Best: |
|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 1 | 9349.95 |
| 1 | 1 | 1 | 4180.21 | 1.5 | 7390.53 |
| 2 | 2 | 2 | 308.04 | 0.375 | 7390.53 |
| 3 | 3 | 3 | 308.04 | 0.5625 | 7386.77 |
| 4 | 4 | 4 | 312.186 | 0.140625 | 7386.77 |
| 5 | 5 | 5 | 312.186 | 0.210938 | 7353.28 |
| 6 | 6 | 6 | 169.472 | 0.316406 | 7335.08 |
| 7 | 7 | 7 | 186.938 | 0.474609 | 7320.24 |
| 8 | 8 | 8 | 269.803 | 0.118652 | 7320.24 |
| 9 | 9 | 9 | 269.803 | 0.177979 | 7295.06 |
| 10 | 10 | 10 | 168.844 | 0.266968 | 7271.23 |

Iter is the iteration (generation) number, Fevals the number of function evaluations used, Gevals the number of gradient evaluations used, grad norm the gradient magnitude, lr the current learning rate (or line search width) and Best the best fitness found.

- Parameters

level – verbosity level
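The lr column of the example log above is consistent with a simple adaptive rule: the learning rate is multiplied by 1.5 after an iteration that improves Best and divided by 4 otherwise. The factors are inferred from the logged numbers only, not confirmed against the implementation, and the names next_lr and lr_trace are hypothetical. A minimal sketch of that assumed rule:

```cpp
#include <vector>

// Assumed update rule, inferred from the example log:
// grow the learning rate by 1.5 after an improving step, divide it by 4 otherwise.
double next_lr(double lr, bool improved)
{
    return improved ? lr * 1.5 : lr / 4.;
}

// Learning rates obtained by applying next_lr once per iteration,
// starting from lr0. improved[i] tells whether iteration i + 1 improved Best.
std::vector<double> lr_trace(double lr0, const std::vector<bool> &improved)
{
    std::vector<double> trace;
    double lr = lr0;
    for (bool ok : improved) {
        lr = next_lr(lr, ok);
        trace.push_back(lr);
    }
    return trace;
}
```

Starting from lr0 = 1 and reading the improvement flags off the Best column (improved, not improved, improved, not improved, ...), the trace begins 1.5, 0.375, 0.5625, 0.140625, which matches the logged values.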

-

inline unsigned get_verbosity() const

Gets the verbosity level.

- Returns

the verbosity level

-

inline std::string get_name() const

Algorithm name.

- Returns

a string containing the algorithm name

-

inline std::string get_extra_info() const

Extra info.

- Returns

a string containing extra info on the algorithm

-

inline const log_type &get_log() const

Get log.

A log containing relevant quantities monitoring the last call to evolve. Each element of the returned std::vector is a gd4cgp::log_line_type as described in gd4cgp::set_verbosity().

- Returns

a std::vector of gd4cgp::log_line_type containing the logged values.
