Gradient Descent (UDA)

- class dcgpy.gd4cgp(max_iter=1, lr=1., lr_min=1e-3)

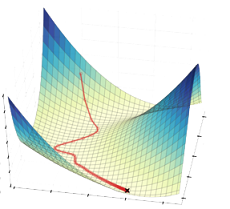

In a symbolic regression problem, model parameters are typically present in the form of ephemeral constants (i.e. extra input terminals). The actual values of the constants have a profound effect on the resulting loss, and it is an open question how to balance learning the model parameters (continuous optimization) with learning the model itself (integer optimization).

Note

GD4CGP is tailored to solve dcgpy.symbolic_regression problems and will not work on other problem types.

In this class we provide a simple gradient descent algorithm able to tackle dcgpy.symbolic_regression problems. The gradient descent modifies only the continuous part of the chromosome (the ephemeral constants), leaving the integer part (i.e. the actual model) unchanged.

- Parameters

max_iter (int) – maximum number of iterations.

lr (float) – initial learning rate (or step size).

lr_min (float) – stopping criterion on the minimum value of the learning rate (or step size).

- Raises

unspecified – any exception thrown by failures at the intersection between C++ and Python (e.g., type conversion errors, mismatched function signatures, etc.)

ValueError – if lr_min is smaller than 0, or larger than lr
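The loop described above can be sketched in plain Python. This is a minimal sketch under stated assumptions and not dcgpy's implementation. The hypothetical helper `gd_constants` receives a fixed model through a user-supplied `loss_and_grad` function, which stands in for the unchanged integer part of the chromosome, and it updates only the constants. The growth factor of 1.5 after an improving step is consistent with the lr column in the example log. The halving after a rejected step is purely an assumption. dcgpy computes the gradient itself, whereas here it is written by hand.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical signature: loss_and_grad(constants) -> (loss, gradient w.r.t. constants).
LossAndGrad = Callable[[List[float]], Tuple[float, List[float]]]


def gd_constants(
    loss_and_grad: LossAndGrad,
    consts: Sequence[float],
    max_iter: int = 1,
    lr: float = 1.0,
    lr_min: float = 1e-3,
    grow: float = 1.5,  # assumed step-size increase after an improving step
    shrink: float = 0.5,  # assumed step-size decrease after a rejected step
) -> Tuple[List[float], float]:
    """Adaptive-step gradient descent on the constants of a fixed model (sketch)."""
    if lr_min < 0 or lr_min > lr:
        raise ValueError("lr_min must satisfy 0 <= lr_min <= lr")
    c = list(consts)
    best, grad = loss_and_grad(c)
    for _ in range(max_iter):
        if lr < lr_min:
            break  # step size became too small: stop
        trial = [ci - lr * gi for ci, gi in zip(c, grad)]
        trial_loss, trial_grad = loss_and_grad(trial)
        if trial_loss < best:
            # Accept the step and try a larger one next time.
            c, best, grad = trial, trial_loss, trial_grad
            lr *= grow
        else:
            # Reject the step, keep the old constants, retry with a smaller step.
            lr *= shrink
    return c, best


# Usage: the model structure y = c0 * x + c1 is fixed; only c0 and c1 are fitted.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 1.0 for x in xs]


def mse_and_grad(c: List[float]) -> Tuple[float, List[float]]:
    n = len(xs)
    res = [c[0] * x + c[1] - y for x, y in zip(xs, ys)]
    loss = sum(r * r for r in res) / n
    grad = [2.0 * sum(r * x for r, x in zip(res, xs)) / n, 2.0 * sum(res) / n]
    return loss, grad


consts, loss = gd_constants(mse_and_grad, [0.0, 0.0], max_iter=1000, lr=0.1, lr_min=1e-12)
print(consts, loss)
```

Accepting a step only when it lowers the loss keeps the loss non-increasing. For that reason the step size can be grown aggressively: an oversized step is simply rejected and retried with a smaller one.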

- get_log()

Returns a log containing relevant parameters recorded during the last call to evolve(). The log frequency depends on the verbosity parameter (by default nothing is logged), which can be set by calling the method set_verbosity() on an algorithm constructed with a gd4cgp. A verbosity of N implies a log line every N iterations.

- Returns

at each logged epoch, the values Gen, Fevals, Gevals, grad norm, lr, Best, where:

Gen (int), generation number.

Fevals (int), number of function evaluations made.

Gevals (int), number of gradient evaluations made.

grad norm (float), norm of the loss gradient.

lr (float), the current learning rate.

Best (float), current fitness value.

- Return type

list of tuples
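Each log entry is a plain positional tuple, so naming the fields can help during post-processing. This is a minimal sketch that assumes only the field order listed above. The helper `parse_gd4cgp_log` and the `LogLine` field names are hypothetical and are not part of dcgpy. In practice `raw_log` would be the list returned by `uda.get_log()`.

```python
from collections import namedtuple
from typing import Iterable, List

# Field order as documented for get_log(); the names themselves are hypothetical.
LogLine = namedtuple("LogLine", ["gen", "fevals", "gevals", "grad_norm", "lr", "best"])


def parse_gd4cgp_log(log: Iterable[tuple]) -> List[LogLine]:
    """Turn each raw get_log() tuple into a record with named fields."""
    return [LogLine(*entry) for entry in log]


# First tuple of the log printed in the Examples section.
raw_log = [(0, 0, 0, 0.0, 0.1, 4588.5979303850145)]
records = parse_gd4cgp_log(raw_log)
print(records[0].lr, records[0].best)  # 0.1 4588.5979303850145
```

The verbose printout labels the first column "Iter", while the log tuple calls it Gen. In this example both count iterations of the gradient descent.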

Examples

    >>> import dcgpy
    >>> from pygmo import *
    >>>
    >>> algo = algorithm(dcgpy.gd4cgp(4, 0.1, 1e-4))
    >>> X, Y = dcgpy.generate_koza_quintic()
    >>> udp = dcgpy.symbolic_regression(X, Y, 1, 20, 21, 2, dcgpy.kernel_set_double(["sum", "diff", "mul"])(), 1, False, 0)
    >>> pop = population(udp, 10)
    >>> algo.set_verbosity(1)
    >>> pop = algo.evolve(pop)
           Iter:     Fevals:     Gevals:  grad norm:         lr:       Best:
               0           0           0           0         0.1      4588.6
               1           1           1     687.738        0.15     4520.41
               2           2           2     676.004       0.225     4420.33
               3           3           3     658.404      0.3375     4275.16
               4           4           4     632.004     0.50625     4068.54
    Exit condition -- max iterations = 4
    >>> uda = algo.extract(dcgpy.gd4cgp)
    >>> uda.get_log()
    [(0, 0, 0, 0.0, 0.1, 4588.5979303850145), ...

See also the docs of the relevant C++ method dcgp::gd4cgp::get_log().
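The verbose output in the Examples section also shows how the step size was scheduled in that run. The short check below uses only the rounded values printed there. It shows that lr was multiplied by 1.5 at every logged iteration and that Best decreased monotonically. It says nothing about how lr changes after a rejected step, which this page does not document.

```python
# lr and Best columns copied from the verbose output in the Examples section (Iter 0 to 4).
lrs = [0.1, 0.15, 0.225, 0.3375, 0.50625]
best = [4588.6, 4520.41, 4420.33, 4275.16, 4068.54]

# Ratio between consecutive learning rates.
ratios = [b / a for a, b in zip(lrs, lrs[1:])]
print([round(r, 6) for r in ratios])  # [1.5, 1.5, 1.5, 1.5]

# Every logged iteration improved on the previous Best value.
print(all(b < a for a, b in zip(best, best[1:])))  # True
```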